Before “reviewing” Section 230, the Biden admin needs to listen to human rights groups about the dangers of changing it

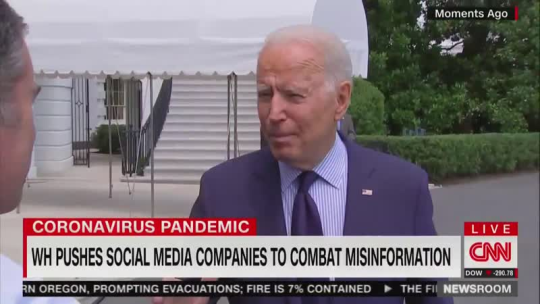

Yesterday, White House Communications Director Kate Bedingfield said on MSNBC, “Certainly [platform companies] should be held accountable,” as the Biden administration says it is “reviewing Section 230” to combat vaccine misinformation on Facebook. Here’s our statement in response, which can be attributed to Evan Greer, Director, Fight for the Future (she/her pronouns).

Before they pursue any haphazard changes to Section 230, the Biden/Harris administration needs to show that they’re actually listening to concerns that human rights and civil liberties experts have raised consistently for years. This includes our letter campaign addressed to the administration and co-signed by 70+ racial justice, LGBTQ, civil liberties, and harm reduction groups, warning that careless changes to Section 230 would endanger free expression and human rights for marginalized people. Signatories include Access Now, Data for Black Lives, Muslim Justice League, Sex Workers Outreach Project, and the Wikimedia Foundation.

While nobody is denying the prevalence of harmful misinformation around the pandemic and vaccines, attacking Section 230 will actually make it harder for platforms to remove harmful-but-not-illegal content, and in the process it will also threaten human rights and free expression for marginalized people. Instead, we support strong federal data privacy legislation (which would severely limit the ability of companies like Facebook to microtarget misinformation directly to the people most susceptible to it), robust antitrust enforcement, and the restoration of net neutrality, to strike at the roots of monopoly power and surveillance capitalism. We’ve also called for an outright ban on surveillance-based advertising and an immediate industry-wide moratorium on non-transparent forms of algorithmic manipulation of content and newsfeeds. We don’t agree that more aggressive content moderation on its own will address the harms of Big Tech, and we fear that without structural changes, more aggressive platform moderation and content removal will disproportionately harm marginalized people and social movements.